1. Why do we need a document-based AI chatbot?

Most organizations already have large amounts of knowledge stored in documents, such as policies, technical specifications, reports, manuals, contracts, and internal guidelines. However, accessing and using this knowledge efficiently remains a challenge.

The limitations of traditional search:

Traditional keyword-based search has several fundamental limitations:

- It only works well when users already know the exact keywords to search for.

- It does not understand context or meaning, which makes natural-language or high-level questions hard to answer.

- Answers often require manually reading multiple documents or sections to piece together the full picture.

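- Important information can be missed if the document's wording differs from the search query. For example, a policy that refers to "annual leave" may not be found by a search for "vacation days".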

Why is a general AI chatbot not enough?

While general AI chatbots are powerful, they are not designed to work with private organizational knowledge:

- They are trained on public data and have no access to your internal documents.

- When uncertain, they may still generate confident but incorrect responses.

- They cannot reliably cite sources, making answers hard to verify or audit.

=> A document-based AI chatbot turns these documents into a trusted knowledge source and makes that knowledge instantly accessible through natural conversation. Below is a suggested architecture for building such a chatbot with Azure services.

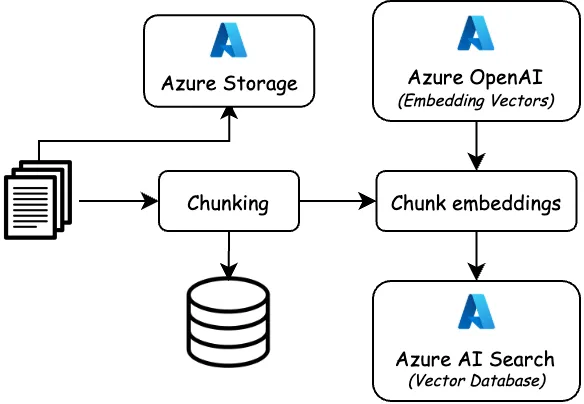

2. Document Ingestion & Indexing

- Azure Blob Storage: store uploaded documents (PDF, DOCX, images).

- Azure AI Document Intelligence: extract text, layout, and tables from documents, using OCR for scanned pages and images.

- Azure OpenAI Embeddings: convert document chunks (short passages split from the extracted text) into vectors.

- Azure AI Search: index the chunks for vector search or hybrid (vector + keyword) search.
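Embedding models accept a limited amount of input text, and retrieval tends to work better on focused passages, so the extracted text is typically split into chunks before it is embedded. The sketch below shows one simple strategy, fixed-size word windows with overlap, so that text near a chunk boundary appears in both neighboring chunks. The function `chunk_text` and its default sizes are hypothetical; real pipelines often split by tokens instead of words, or along the paragraphs and headings that Document Intelligence detects.

```python
def chunk_text(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of at most max_words words.

    Consecutive windows share `overlap` words so that context near a
    boundary is not lost. The default sizes are illustrative assumptions.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be between 0 and max_words - 1")

    words = text.split()
    step = max_words - overlap  # how far each new window moves forward
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the text
    return chunks


document = " ".join(f"w{i}" for i in range(10))  # stand-in for extracted text
for chunk in chunk_text(document, max_words=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```

Larger overlaps preserve more context across boundaries at the cost of storing and embedding more duplicated text.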

=> This layer is responsible for transforming raw documents into structured, searchable knowledge that the AI can reliably retrieve.
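In practice, "structured, searchable knowledge" usually means one index record per chunk: the chunk text for keyword search, its embedding for vector search, and metadata such as the source file and page so that answers can point back to where they came from. The sketch below assumes a hypothetical schema (`IndexRecord` with fields `id`, `source`, `page`, `content`, `content_vector`) and takes the embedding function as a parameter, because the actual call to Azure OpenAI Embeddings is outside its scope. Azure AI Search does not require these field names; you define the index schema yourself.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class IndexRecord:
    """One searchable chunk. Field names are a hypothetical schema."""
    id: str              # unique key of the record in the index
    source: str          # original file name, e.g. the blob name
    page: int            # page the chunk came from, useful for citations
    content: str         # chunk text, used by keyword search
    content_vector: list[float] = field(repr=False)  # embedding, used by vector search


def build_records(
    source: str,
    chunks: list[tuple[int, str]],
    embed: Callable[[str], list[float]],
) -> list[IndexRecord]:
    """Turn (page, chunk_text) pairs from one file into index records.

    `embed` stands in for a call to an embedding model (hypothetical here).
    """
    # Restrict keys to letters, digits, '_' and '-', a conservative choice for index keys.
    safe_source = re.sub(r"[^A-Za-z0-9_-]", "_", source)
    records: list[IndexRecord] = []
    for i, (page, text) in enumerate(chunks):
        if not text.strip():
            continue  # skip empty chunks, e.g. blank or unreadable pages
        records.append(
            IndexRecord(
                id=f"{safe_source}-{i}",
                source=source,
                page=page,
                content=text,
                content_vector=embed(text),
            )
        )
    return records


def fake_embed(text: str) -> list[float]:
    # Toy stand-in: real vectors come from the embedding model.
    return [float(len(text)), 1.0]


records = build_records(
    "leave-policy.pdf",
    [(1, "Employees get 20 days."), (2, "Carry-over is capped.")],
    fake_embed,
)
print([r.id for r in records])  # ['leave-policy_pdf-0', 'leave-policy_pdf-1']
```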

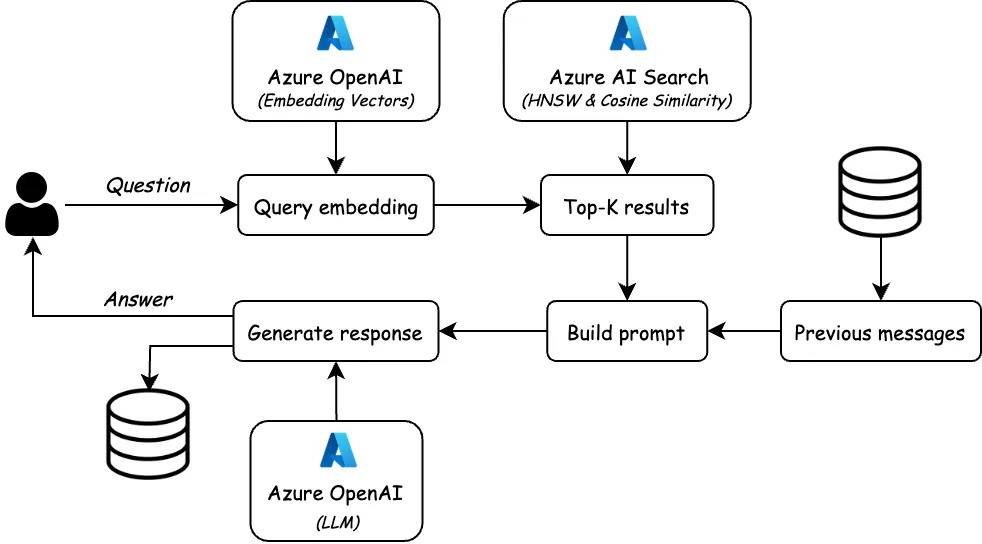

3. Retrieval-Augmented Chat

- Azure Container Apps: host the chatbot backend & frontend.

- Azure OpenAI Embeddings: convert the user's question into a vector, using the same embedding model that was used for the document chunks.

- Azure AI Search: retrieve the most relevant chunks.

- Azure OpenAI LLM: generate an answer grounded only in the retrieved chunks, which are passed to the model as context in the prompt.
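In the real system, Azure AI Search performs this ranking inside the index. To make "most relevant chunks" concrete, the in-memory sketch below scores chunks by cosine similarity to the question vector, then merges that vector ranking with a keyword ranking using Reciprocal Rank Fusion (RRF), the fusion method Azure AI Search describes for hybrid queries. The chunk ids, 3-dimensional vectors, and keyword ranking are made up for illustration; real embeddings have far more dimensions, and a search index typically uses approximate nearest-neighbor search instead of scoring every chunk. The RRF constant `k = 60` is a commonly used value, assumed here.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def vector_top_k(query: list[float], chunk_vectors: dict[str, list[float]], k: int = 3) -> list[str]:
    """Exhaustive nearest-neighbor search: score every chunk, keep the best k ids."""
    ranked = sorted(
        chunk_vectors,
        key=lambda cid: cosine_similarity(query, chunk_vectors[cid]),
        reverse=True,
    )
    return ranked[:k]


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked id lists; an id at rank r in a list adds 1 / (k + r)."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, cid in enumerate(ranking, start=1):
            scores[cid] = scores.get(cid, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda cid: scores[cid], reverse=True)


chunk_vectors = {
    "leave-policy": [0.9, 0.1, 0.0],
    "expense-policy": [0.1, 0.9, 0.0],
    "it-security": [0.0, 0.2, 0.9],
}
question_vector = [1.0, 0.2, 0.0]

vector_ranking = vector_top_k(question_vector, chunk_vectors, k=3)
keyword_ranking = ["leave-policy", "it-security"]  # pretend result of a keyword query
print(vector_ranking)  # ['leave-policy', 'expense-policy', 'it-security']
print(reciprocal_rank_fusion([vector_ranking, keyword_ranking]))
# ['leave-policy', 'it-security', 'expense-policy']
```

Because RRF combines ranks rather than raw scores, it does not need keyword and vector scores to be on the same scale, which is what makes it a natural fit for hybrid search.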

=> This layer handles user interaction and generates grounded answers based on retrieved document content.
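Grounding happens in the prompt: the retrieved chunks are placed in the model's context together with instructions to answer only from them. The sketch below builds a message list in the role/content format used by chat-completion APIs such as Azure OpenAI's; the function name `build_grounded_messages`, the `source`/`text` chunk keys, and the prompt wording are assumptions for illustration. Instructions like these reduce ungrounded answers but do not rule them out, which is why asking the model to cite a source for each fact helps users verify the answer.

```python
def build_grounded_messages(question: str, chunks: list[dict[str, str]]) -> list[dict[str, str]]:
    """Build chat messages that ask the model to answer only from `chunks`.

    Each chunk is a dict with hypothetical keys 'source' and 'text'.
    """
    # Label every chunk with its source so the model can cite it.
    context = "\n\n".join(f"[{c['source']}]\n{c['text']}" for c in chunks)
    system_prompt = (
        "Answer the user's question using ONLY the sources below. "
        "After each fact, cite its source name in square brackets. "
        "If the sources do not contain the answer, say: "
        "\"I could not find this in the provided documents.\"\n\n"
        f"Sources:\n{context}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


messages = build_grounded_messages(
    "How many days of annual leave do employees get?",
    [{"source": "leave-policy.pdf", "text": "Full-time employees receive 20 days of annual leave."}],
)
print(messages[0]["content"])
```

The returned list can then be passed as the `messages` argument of a chat completions request to the deployed Azure OpenAI model.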