Deep learning refers to algorithms based on artificial neural networks (ANNs), which in turn are loosely modeled on biological neural networks such as the human brain. Thanks to more labeled data, more compute power, better optimization algorithms, and better neural network models and architectures, deep learning has started to surpass humans at some image recognition and classification tasks.

Work is being done to obtain similar levels of performance in natural language processing and understanding. Deep learning applies to supervised, unsupervised and reinforcement learning. According to Jeff Dean in a recent interview, Google have implemented it in over one hundred of their products and services including search and photos.

In this article we briefly describe some of the more familiar deep learning frameworks, including TensorFlow, Torch and Theano, outline common benchmarks, and include references so that the interested reader can compare the similarities and differences between them. We will use the terms deep learning and neural networks interchangeably.

TensorFlow is the newly open-sourced deep learning library from Google. It is their second-generation system for implementing and deploying large-scale machine learning models. Written in C++ with a Python interface, it was born from researching and deploying machine learning projects across a wide range of Google products and services. Google and the open source community are continually adding changes, including a release that runs on a distributed cluster.

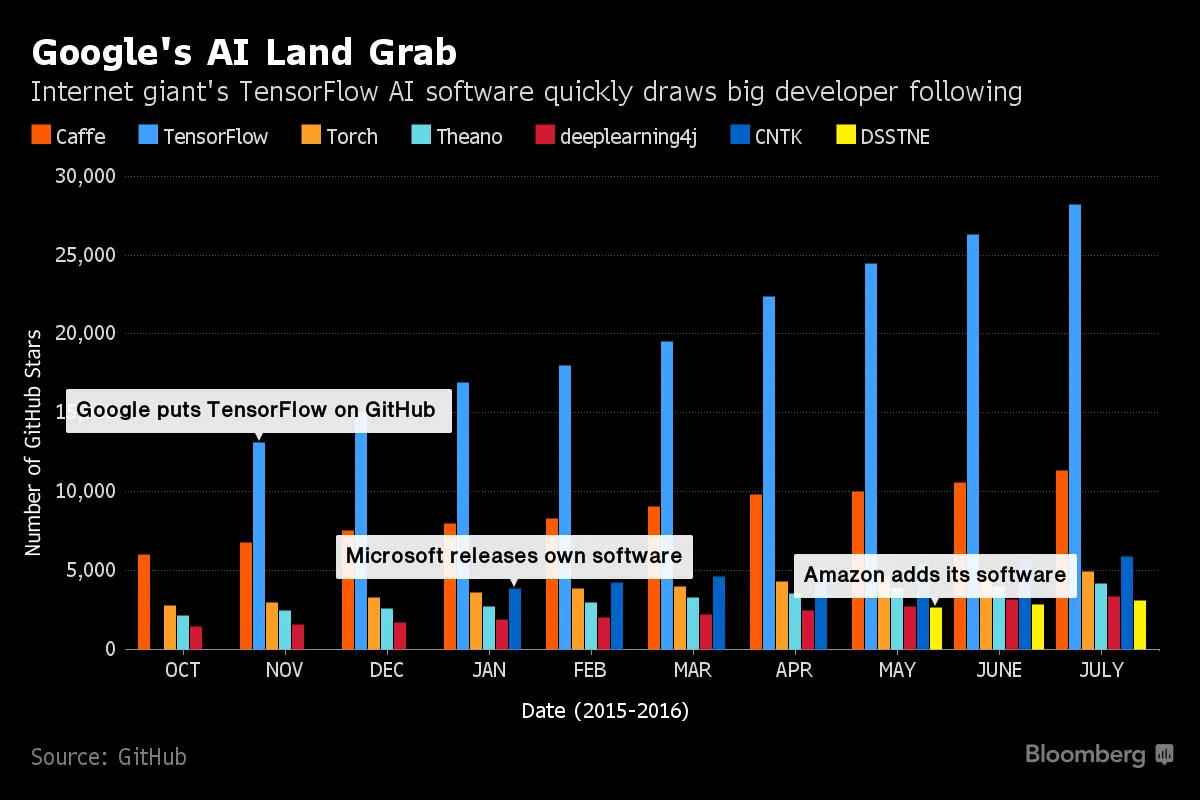

Figure 1 - Since its release in November 2015, TensorFlow has become the clear leader in open source deep learning frameworks.

Torch is a neural network library with a C/CUDA core and a Lua scripting interface, originally developed by a team from the Swiss institute EPFL. At the heart of Torch are popular neural network and optimization libraries that are simple to use, yet flexible enough to implement complex neural network topologies. Finally, Theano is a deep learning library written in Python and popular for its ease of use. Using Theano, it is possible to attain speeds rivaling hand-crafted C implementations for problems involving large amounts of data.

So what are the various metrics we can use to compare open source software libraries in general, and these deep learning libraries in particular? The most common ones are speed of execution, ease of use, languages used (core and front-end), resources (CPU and memory capacity) needed in order to run the various algorithms, GPU support, size of active community of users, contributors and committers, platforms supported (e.g., OS, single devices and/or distributed systems), algorithmic support, and number of packages in their library. Various benchmarks and comparisons are available here, here and here.
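To make the first of these metrics, speed of execution, concrete, here is a minimal sketch of a timing harness using only the Python standard library. The helper names (`benchmark`, `compare`) and the two pure-Python workloads are hypothetical stand-ins invented for illustration; they are not part of any of the frameworks discussed here. In a real comparison, each candidate would wrap the same model step implemented in a different framework.

```python
import statistics
import time


def benchmark(fn, *args, repeats=5, warmup=1):
    """Time fn(*args) and return (median_seconds, best_seconds)."""
    for _ in range(warmup):
        fn(*args)  # warm-up runs are discarded (caches, lazy initialization)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings), min(timings)


# Hypothetical stand-in workloads: two ways to compute the same dense
# matrix-vector product in pure Python.
def matvec_loops(matrix, vector):
    result = []
    for row in matrix:
        total = 0.0
        for a, b in zip(row, vector):
            total += a * b
        result.append(total)
    return result


def matvec_builtin(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def compare(candidates, *args, repeats=5):
    """Return {name: median_seconds} for each candidate callable."""
    return {name: benchmark(fn, *args, repeats=repeats)[0]
            for name, fn in candidates.items()}


if __name__ == "__main__":
    size = 200
    matrix = [[float(i + j) for j in range(size)] for i in range(size)]
    vector = [1.0] * size
    results = compare({"loops": matvec_loops, "builtin": matvec_builtin},
                      matrix, vector)
    # Print the fastest candidate first.
    for name, seconds in sorted(results.items(), key=lambda kv: kv[1]):
        print(f"{name}: {seconds * 1e3:.3f} ms")
```

Reporting the median over several repeats, after a discarded warm-up run, reduces the influence of one-off costs such as initialization. Timings like these capture only one of the metrics listed above and will vary with hardware and library versions.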

Of course, these libraries are not static, but rather dynamic, living repositories, constantly evolving as the user base adds to and modifies them. As Google report in their white paper, published to support the release of TensorFlow, “We will continue to use TensorFlow to develop new and interesting machine learning models for artificial intelligence, and in the course of doing this, we may discover ways in which we will need to extend the basic TensorFlow system. The open source community may also come up with new and interesting directions for the TensorFlow implementation.”

Over the course of 2015/2016, Google, IBM, Samsung, Microsoft, Nervana, Baidu and others all open sourced their machine learning frameworks. Suffice it to say, there are many open source deep learning libraries out there for people to use. Frameworks and libraries familiar to researchers and developers in this space include Caffe, cuDNN, Deeplearning4j, CNTK and MXNet. In fact, there is such a plethora of machine learning libraries that many are beginning to ask how we should decide which ones to use, and whether we should start thinking about combining them to remove confusion and add efficiencies. This is an interesting space to be working in right now, with refinements added seemingly every day and the landscape constantly evolving.

It is certainly an interesting journey we are on as these developments help bring us towards the holy grail of artificial general intelligence: intelligence that can truly multitask just as biological intelligence can. Sometimes I can’t help but step back and watch in awe and wonder as the field of artificial intelligence unfolds, and contemplate the ramifications that go along with this progress.

August 2016

Update July 2017: New frameworks that have appeared in the interim include PyTorch, Caffe2 and Keras. TensorFlow is also being used extensively within Google as the following graph shows.